Artificial intelligence is no longer a future concept in health and safety. It is already being used to identify risk, predict incidents, monitor behaviour, and surface insights that traditional systems simply cannot.

But as interest in AI in health and safety accelerates, so does confusion.

Many organisations are asking the same questions:

What does AI actually do in a safety context?

Where does it add real value - and where does it create new risk?

How do PCBUs (persons conducting a business or undertaking) adopt AI responsibly without outsourcing judgment or accountability?

These questions sit at the heart of Season 5, Episode 3 of the Health and Safety Unplugged podcast, where I sat down with Tane van der Bloon from Inviol to explore what AI means for real-world safety systems - not theory, not hype, and not vendor-driven promises.

This article breaks down the key themes from that conversation and places them in a practical, New Zealand context.

The Role of AI in Health and Safety Today

AI in health and safety supports better decision-making by analysing data at scale, identifying patterns humans miss, and highlighting risk earlier - but it does not replace professional judgment or PCBU accountability.

AI tools are increasingly used to:

analyse large volumes of safety data

detect trends across incidents, near misses, and observations

support earlier intervention before harm occurs

provide consistency where manual systems struggle

In the podcast, Tane makes a critical distinction: AI is most powerful when it augments human capability, not when it attempts to replace it.

This framing matters. Under the Health and Safety at Work Act 2015 (HSWA), duties cannot be delegated to software. AI can inform decisions, but responsibility always remains with the PCBU.

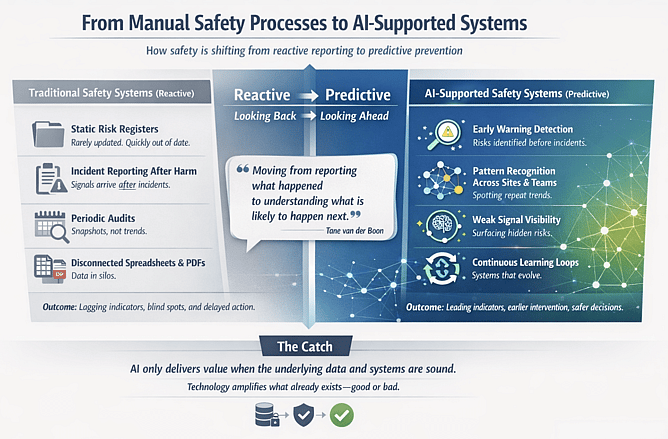

From Manual Safety Processes to AI-Supported Systems

Most organisations still rely heavily on manual safety processes:

static risk registers

incident reporting after harm has occurred

periodic audits that offer snapshots, not trends

spreadsheets and PDFs that do not talk to each other

AI changes this by shifting safety systems from reactive to predictive.

Instead of waiting for incidents to signal failure, AI-supported platforms can:

identify early warning signs

highlight repeat patterns across sites or teams

surface weak signals that would otherwise remain invisible

In the interview, Tane describes this as moving from “reporting what happened” to understanding what is likely to happen next.

That shift is significant - but only when the underlying data and systems are sound.
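To make "repeat patterns across sites" concrete, here is a minimal sketch in Python. It is deliberately not machine learning: it is a plain grouping rule, and the site names, hazard categories, and the `min_sites` cut-off are all made up for illustration. A real platform would draw on far richer data, but the underlying question is the same.

```python
# Hypothetical near-miss records as (site, hazard_category) pairs.
# Illustrative data only, not drawn from any real system.
REPORTS = [
    ("Site A", "forklift-pedestrian"),
    ("Site B", "forklift-pedestrian"),
    ("Site A", "working at height"),
    ("Site C", "forklift-pedestrian"),
    ("Site B", "manual handling"),
]


def repeat_patterns(reports, min_sites=2):
    """Return hazard categories reported at min_sites or more distinct sites."""
    sites_by_hazard = {}
    for site, hazard in reports:
        # A set means repeat reports from one site are counted once.
        sites_by_hazard.setdefault(hazard, set()).add(site)
    return {
        hazard: sorted(sites)
        for hazard, sites in sites_by_hazard.items()
        if len(sites) >= min_sites
    }


print(repeat_patterns(REPORTS))
# {'forklift-pedestrian': ['Site A', 'Site B', 'Site C']}
```

Each individual report looks minor in isolation. Grouped this way, the same hazard shows up at three sites, which is the kind of signal that tends to stay hidden when each site keeps its own spreadsheet.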

Why AI in Health and Safety Is Gaining Momentum

AI adoption in health and safety is accelerating for several reasons.

Predictive Risk Management Using AI in Health and Safety

AI enables earlier identification of risk by analysing trends across incidents, behaviours, and conditions before harm occurs.

Traditional systems tend to focus on lag indicators. AI allows organisations to interrogate lead indicators at scale.

This is particularly relevant in complex environments where:

work changes frequently

contractors are involved

risk exposure varies day to day

manual oversight has limits
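As a rough sketch of what interrogating a lead indicator can look like, the Python below compares the recent near-miss reporting rate with an earlier baseline. Treating near-miss reports as the lead indicator, the four-week window, and the 1.5x threshold are all assumptions chosen for illustration, not recommendations.

```python
from statistics import mean

# Hypothetical weekly data, oldest first: (near_miss_reports, hours_worked).
WEEKLY = [
    (2, 4000), (3, 4000), (2, 4000), (3, 4000),
    (4, 4000), (5, 4000), (5, 4000), (6, 4000),
]


def rate_per_10k_hours(count, hours):
    """Normalise a count by exposure so busy and quiet weeks are comparable."""
    return count * 10_000 / hours


def trend_flag(weekly, recent_weeks=4, threshold=1.5):
    """True when the recent mean rate is at least threshold times the baseline."""
    if len(weekly) <= recent_weeks:
        raise ValueError("need more history than the recent window")
    rates = [rate_per_10k_hours(count, hours) for count, hours in weekly]
    baseline = mean(rates[:-recent_weeks])
    recent = mean(rates[-recent_weeks:])
    if baseline == 0:
        # No earlier reports at all: any recent report counts as a change.
        return recent > 0
    return recent / baseline >= threshold


print(trend_flag(WEEKLY))  # True: the recent rate is double the baseline
```

A flag like this is a prompt for a conversation, not a conclusion. A rising near-miss rate can mean exposure is increasing, or simply that people are reporting more, and telling those apart is a human judgment.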

Real-Time Monitoring and AI in Health and Safety Systems

AI-powered tools increasingly support real-time insights through:

sensor data

digital permits

behavioural observation platforms

operational system integration

However, as discussed in the podcast, real-time data is only valuable if there is governance around how it is used.

More data does not automatically mean better safety outcomes.

Reducing Human Error Without Removing Human Judgment

AI can reduce cognitive load and inconsistency, but it cannot replace contextual understanding or ethical decision-making.

One of the strongest insights from the conversation with Tane is that AI does not remove error - it changes where error occurs.

If inputs are flawed, biased, or incomplete, AI will scale those weaknesses.

This is why AI must sit within:

clear decision-making frameworks

defined escalation pathways

competent human oversight
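One way to picture a defined escalation pathway is as an explicit routing rule that sits between an AI output and any action. The sketch below is hypothetical throughout: the `risk_score` field, the 0.7 threshold, and the role names stand in for whatever a given organisation and tool actually use.

```python
from dataclasses import dataclass


@dataclass
class Flag:
    description: str
    risk_score: float      # hypothetical model output between 0 and 1
    fields_complete: bool  # did the underlying record pass basic data checks?


def route(flag, escalate_at=0.7):
    """Decide who reviews a flag. Every route ends with a human, never auto-closure."""
    if not flag.fields_complete:
        # Incomplete inputs are fixed at the source before the score is trusted.
        return "return to reporter for missing information"
    if flag.risk_score >= escalate_at:
        return "escalate to site manager and safety advisor"
    return "review at weekly safety meeting"


print(route(Flag("Repeated guarding bypass on line 3", 0.82, True)))
# escalate to site manager and safety advisor
```

The design choice that matters here is that no branch lets the software close an item out. The score changes how quickly a person looks at it, not whether a person looks at it.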

Cost Efficiency and Resource Allocation

AI can reduce administrative burden by:

automating analysis

prioritising issues that matter most

reducing time spent searching for insights

That efficiency is attractive - but only when it supports better control selection, not just faster reporting.

Compliance and AI in Health and Safety

AI can assist with:

identifying gaps against expected standards

highlighting misalignment between practice and documentation

supporting internal assurance processes

What it cannot do is create compliance by default.

As reinforced in the podcast, regulators assess outcomes, decisions, and systems - not software adoption.

AI in Health and Safety Requires Governance, Not Just Technology

One of the most important themes in the interview is governance.

Before adopting AI tools, organisations should be able to answer:

What decisions will AI inform?

What decisions will always remain human-led?

How are outputs validated?

How is bias managed?

How is data quality assured?

Without these answers, AI risks becoming:

a false sense of security

an unchallenged authority

a compliance blind spot

This is where many early adopters stumble.

Expert Insight: Tane van der Bloon on AI and Safety Systems

Tane brings a grounded perspective shaped by operational reality, not Silicon Valley optimism.

A recurring message throughout the episode is this:

“AI is only as good as the system it sits within.”

Strong safety outcomes still rely on:

well-designed risk frameworks

clear accountabilities

effective application of the hierarchy of controls

leadership engagement

AI enhances those systems - it does not replace them.

Where AI Fits - and Where It Does Not

AI adds the most value when:

data is consistent and reliable

risk is dynamic and complex

early intervention matters

insights are acted on

AI adds the least value when:

organisations are trying to compensate for weak fundamentals

documentation does not reflect reality

leadership is disengaged

risk controls rely on behaviour alone

This distinction is critical for business leaders considering adoption.

AI in Health and Safety and PCBU Accountability

Using AI does not reduce PCBU duties. It increases the expectation that risks are understood and managed proactively.

If AI surfaces risk indicators and those indicators are ignored, accountability does not disappear.

In fact, it may increase.

This reinforces the need for AI to be embedded into decision-making processes - not bolted on as a reporting tool.

Common Questions About AI in Health and Safety

Is AI required to meet HSWA obligations?

No. AI is not required, but organisations must manage risk so far as is reasonably practicable. AI may support that duty where appropriate.

Can AI replace safety advisors or consultants?

No. AI supports analysis, not professional judgment, leadership, or accountability.

Is AI suitable for all industries?

Not always. High-variability, data-rich environments tend to benefit most.

Does AI improve safety culture?

Only when paired with leadership, engagement, and good system design.

Watch the Full Conversation: Health and Safety Unplugged

This article only scratches the surface.

In Season 5, Episode 3 of Health and Safety Unplugged, Tane van der Bloon and I explore:

where AI genuinely improves safety outcomes

where organisations are getting it wrong

what responsible adoption looks like in practice

If you are considering AI in your safety system, this conversation will help you separate value from noise.

Final Thought: AI Is a Tool, Not a Strategy

AI will continue to shape health and safety practice in New Zealand.

But strong outcomes will always depend on:

system design

leadership decisions

practical application of controls

willingness to reassess assumptions

AI can enhance those foundations - but it cannot replace them.

About the author

This article was written by Matt Jones, a HASANZ-registered health and safety professional and founder of Advanced Safety.

Matt advises New Zealand business leaders on practical health and safety system design, HSWA compliance and risk management, with a focus on construction, manufacturing, and complex operational environments. He is also the host of the Health and Safety Unplugged podcast, where he speaks with industry leaders about real-world safety challenges and emerging practice.

Advanced Safety is a New Zealand-based workplace health and safety consultancy providing compliance audits, ISO 45001-aligned systems, risk assessments, and ongoing safety management support for construction, manufacturing and commercial businesses.

Links:

Matt Jones – LinkedIn

Advanced Safety – https://www.advancedsafety.co.nz/

Health and Safety Unplugged (YouTube) – https://www.youtube.com/@advancedsafety